A new addition for your hydrological toolbox

by Thibault Hallouin and Maria-Helena Ramos

As hydrological modellers, we routinely assess the merits of our model predictions using our favourite metrics. Our taste in metrics is largely shared within the community: the likes of the Nash-Sutcliffe Efficiency (NSE) and the Kling-Gupta Efficiency (KGE) are often our go-to’s when dealing with model simulations, whereas the Brier score and the Continuous Ranked Probability Score (CRPS) are popular choices amongst us for assessing ensemble forecasts.

While the computation of some evaluation metrics is straightforward and unambiguous, others rely on assumptions and approximations. This opens the door to discrepancies in how different modellers compute them.

Take the CRPS for example. It can be obtained in different ways: by integrating the empirical cumulative distribution function (CDF) along the x-axis (i.e. the measured variable values), by integrating it along the y-axis (i.e. the cumulative probabilities), or even by integrating Brier scores over all possible exceedance thresholds. While these formulations are all mathematically equivalent for continuous distributions, the distribution formed by the ensemble forecasts is only a discrete estimate of the continuous distribution, and in practice the integrals are evaluated numerically. This results in numerical differences between the formulations.
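To make this concrete, here is a minimal sketch in Python with NumPy comparing two of these routes on a toy ensemble. The function names `crps_from_ecdf` and `crps_from_brier`, the ensemble values, and the threshold grids are all made up for illustration; this is not EvalHyd's interface, nor necessarily how it computes the score.

```python
import numpy as np


def crps_from_ecdf(members, obs):
    """CRPS from the exact integral of (F_ens(x) - 1{x >= obs})^2 along x.

    For an empirical CDF, this integral has a closed form (the so-called
    energy form), which is used here instead of numerical integration.
    """
    members = np.asarray(members, dtype=float)
    term_obs = np.mean(np.abs(members - obs))
    term_spread = np.mean(np.abs(members[:, None] - members[None, :]))
    return float(term_obs - 0.5 * term_spread)


def crps_from_brier(members, obs, thresholds):
    """CRPS approximated by integrating Brier scores over a finite grid.

    Non-exceedance events are used; the Brier score is identical for the
    complementary exceedance events. Integration uses the trapezoidal rule.
    """
    members = np.asarray(members, dtype=float)
    thresholds = np.asarray(thresholds, dtype=float)
    # forecast probability that the variable does not exceed each threshold
    prob = np.mean(members[None, :] <= thresholds[:, None], axis=1)
    # binary outcome: did the observation stay at or below each threshold?
    occurred = (obs <= thresholds).astype(float)
    brier = (prob - occurred) ** 2
    return float(np.sum(0.5 * (brier[1:] + brier[:-1]) * np.diff(thresholds)))


# toy five-member ensemble and observation (arbitrary values)
members = [2.0, 3.5, 4.0, 6.0, 7.5]
obs = 5.0

exact = crps_from_ecdf(members, obs)
coarse = crps_from_brier(members, obs, np.linspace(0.0, 10.0, 6))
fine = crps_from_brier(members, obs, np.linspace(0.0, 10.0, 10001))

print(f"ECDF integral:        {exact:.4f}")
print(f"Brier, 6 thresholds:  {coarse:.4f}")
print(f"Brier, 10001 thresh.: {fine:.4f}")
```

On this toy case, the exact integral gives 0.72, whereas the Brier route with only six thresholds gives 0.88. The two only converge as the threshold grid gets finer, so two modellers using different grids would report different CRPS values for the same forecast.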

Beyond the computation of the metrics themselves, the preliminary processing of the model predictions (e.g. handling of missing data, data transformation, or selection of extreme events) and the subsequent processing of the computed metrics (e.g. sensitivity analysis or uncertainty estimation) are subject to further assumptions. For instance, when applying an inverse or a logarithmic transformation to streamflow data to put more emphasis on low flows, streamflow values of zero need to be dealt with. Some modellers may add a small constant value to the streamflow data to bypass this problem, while others may employ other approaches.
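The effect of that small constant is easy to underestimate. The sketch below, in Python with NumPy, computes the NSE on log-transformed flows for two different constants. The helper names `nse` and `log_nse`, the streamflow values, and both constants are hypothetical choices made for illustration only.

```python
import numpy as np


def nse(obs, sim):
    """Nash-Sutcliffe Efficiency between observations and simulations."""
    obs = np.asarray(obs, dtype=float)
    sim = np.asarray(sim, dtype=float)
    return float(1.0 - np.sum((obs - sim) ** 2) / np.sum((obs - obs.mean()) ** 2))


def log_nse(obs, sim, epsilon):
    """NSE on log-transformed flows, with a constant added to avoid log(0)."""
    obs = np.asarray(obs, dtype=float)
    sim = np.asarray(sim, dtype=float)
    return nse(np.log(obs + epsilon), np.log(sim + epsilon))


# toy streamflow series containing a zero-flow observation (arbitrary values)
q_obs = [0.0, 0.5, 1.2, 3.4, 8.0]
q_sim = [0.1, 0.4, 1.5, 3.0, 7.0]

# two hypothetical choices for the added constant
score_small = log_nse(q_obs, q_sim, epsilon=0.01)
score_large = log_nse(q_obs, q_sim, epsilon=1.0)

print(f"log-NSE with epsilon=0.01: {score_small:.3f}")
print(f"log-NSE with epsilon=1.0:  {score_large:.3f}")
```

Same observations, same simulations, yet the score depends on the constant, because a smaller constant stretches the lowest flows further apart in log space. Unless the value used is reported alongside the metric, the result cannot be reproduced.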

These are only some of the many discrepancies that will inevitably exist between hydrological modellers. One unfortunate consequence of these can be the difficulty in reproducing the results obtained by our peers.

There are a variety of tools out there that can be used to compute evaluation metrics, e.g. EVS in Java (Brown et al., 2010), verification and scoringRules in R, ensverif and properscoring in Python. But here is another hurdle to reproducibility: we do not all use the same programming language!

This is how the idea of producing a new evaluation tool for hydrologists emerged, and a little while later, EvalHyd was born.

EvalHyd comes with four satellite bindings: for C++, Python, R, and a command line interface.

EvalHyd is polyglot: it can be used from several popular open source programming languages (Python, R, C++), and as an executable from the command line for those amongst us not using these languages. All of these are bindings around a core compiled in C++, which keeps the tool computationally efficient.

It features all of our favourite metrics mentioned above, for both deterministic and probabilistic evaluation. It also offers handy functionalities for hydrological evaluation (e.g. temporal and conditional masking, data transformation, uncertainty estimation).

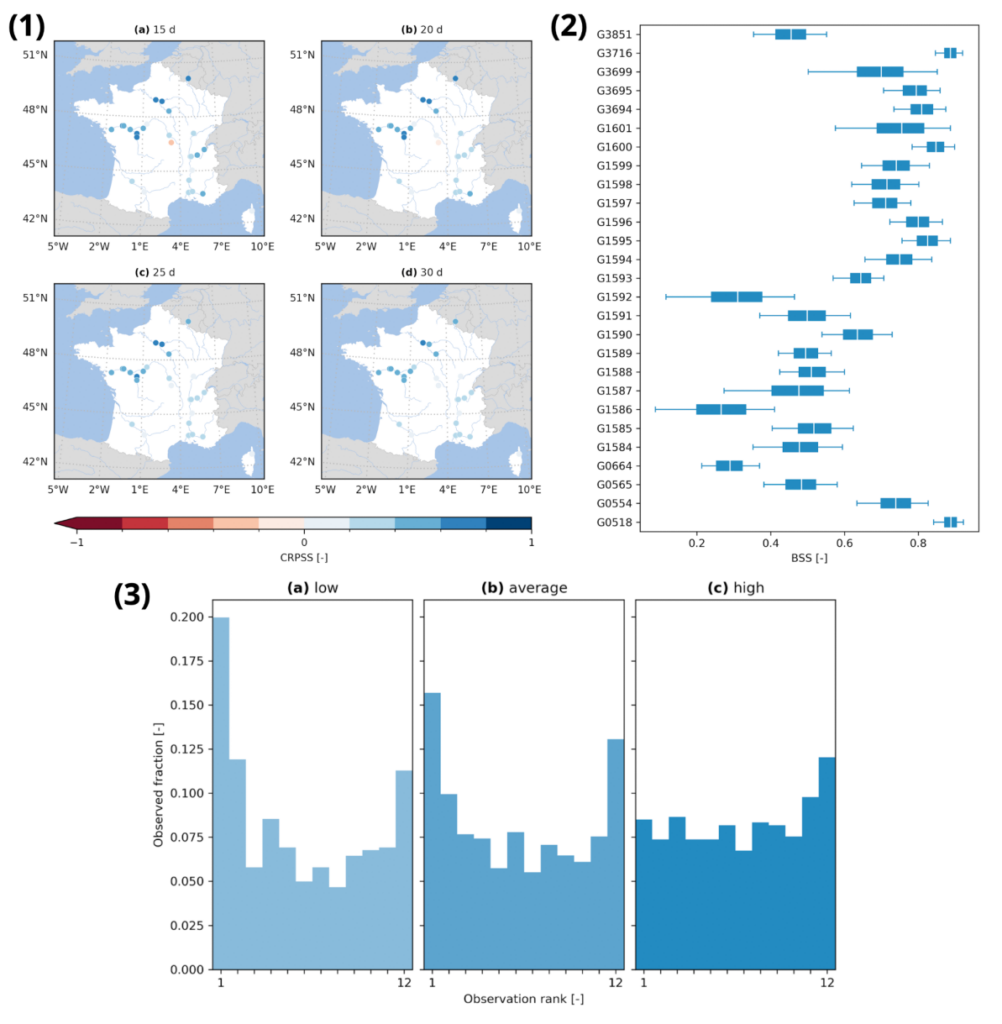

Three examples of probabilistic analyses that can be easily performed with EvalHyd: (1) a spatial distribution of Continuous Ranked Probability Skill Scores (CRPSS) against a climatology benchmark, (2) an uncertainty estimation through boxplots of Brier Skill Scores (BSS) on a 20th percentile threshold against a climatology benchmark, (3) stratified rank histograms for various flow ranges. These figures are taken from Hallouin et al. (2024), where more details about them can be found.

The interfaces are kept as identical as possible across the various bindings so that we can help each other (even if we do not use the same programming language), and so that one can keep using the tool even if they have to start using another language when they join a new lab.

The tool is open source and comes with online documentation. So why don’t you give it a try? Hopefully, going beyond programming language barriers will stimulate collaborations between research labs with a view to sharing best practices in hydrological evaluation. Community contributions are very welcome on the related GitLab repositories!
