HEPEX Science and Challenges: Verification of Ensemble Forecasts (1/4)

Contributed by Julie Demargne and James Brown

What do we mean by forecast verification?

Forecast verification is the process of assessing the quality of a forecast product or a forecast system. All forecast systems, whether deterministic, ensemble or probabilistic, are subject to errors and biases (i.e., systematic errors). Thus, hydrologic forecasts are incomplete if their quality is unknown or not communicated to forecasters and end users. The efficiency and effectiveness with which forecast products are delivered to end users is also important for operational forecasting agencies.

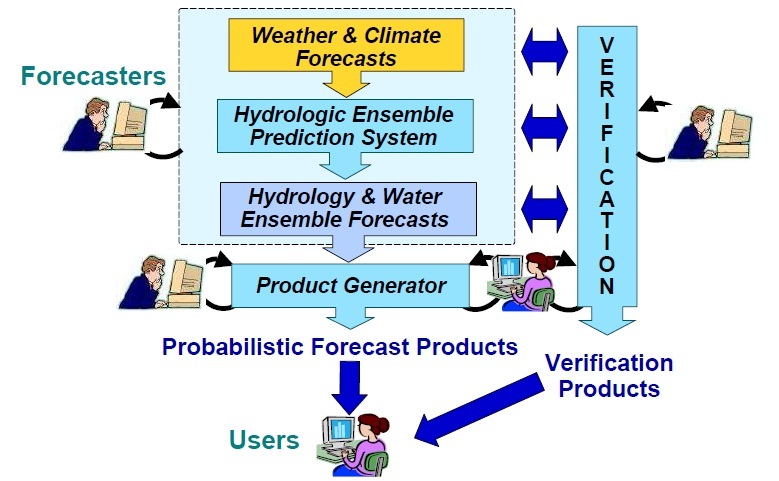

Hydrologic verification: from weather and climate forecasts to river and water forecasts (from: Demargne et al., HEPEX Workshop – Toulouse, France, 15-18 June 2009)

What are the goals of verification?

In operational forecasting, verification includes (e.g., Demargne et al. 2009):

- assessing the quality of delivered forecast services in terms of forecast usability and service efficiency (e.g., number of forecast locations, types of forecasts, spatial and temporal scales, effort for issuing forecast products, forecast timeliness). This is referred to as forecast services evaluation and could help operational agencies share successes and failures in delivering forecast services;

- evaluating the quality of the forecast, which is defined as the degree of correspondence between the forecasts and a reference. Typically, the reference comprises observations, but it may involve model outputs, including model outputs that help to isolate a particular source of error (e.g., hydrologic simulations). This is referred to as forecast verification.

Forecast verification includes two main activities: diagnostic verification and real-time verification.

Diagnostic verification aims to describe the quality of past forecasts or reforecasts to help forecasters and modelers improve the forecast system and processes.

In real time, mining the diagnostic verification information and the archive of past forecasts and reforecasts, conditional on the live forecast, could help forecasters and end users predict the quality of a live forecast (before the outcome is observed) from the quality of historical analogs. Real-time verification could lead to adjusting the forecast, or its use in a downstream application, based on forecast analog information, or to gauging the suitability of an automated adjustment (i.e., statistical post-processing).
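As a rough illustration of the analog idea, the sketch below estimates the likely error of a live forecast from the errors of the most similar past forecasts. It assumes, purely for illustration, that similarity is measured by the distance between ensemble means and that the archive is a list of (past forecast mean, observed value) pairs; the function name `analog_error_estimate`, the parameter `k`, and the sample values are hypothetical and are not taken from any HEPEX tool.

```python
from statistics import mean


def analog_error_estimate(live_mean, archive, k=3):
    """Estimate the absolute error of a live forecast from its k closest analogs.

    archive: list of (past_forecast_mean, observed_value) pairs.
    Assumption: analogs are the past forecasts whose mean is closest
    to the live forecast mean; real systems may use richer criteria.
    """
    nearest = sorted(archive, key=lambda pair: abs(pair[0] - live_mean))[:k]
    return mean(abs(forecast - observed) for forecast, observed in nearest)


# Hypothetical archive of past forecast means and verifying observations.
archive = [(10.0, 12.0), (50.0, 40.0), (52.0, 45.0), (48.0, 50.0), (90.0, 70.0)]

expected_error = analog_error_estimate(live_mean=50.0, archive=archive, k=3)
print(f"Expected absolute error from analogs: {expected_error:.2f}")
```

In practice the analog selection would likely also condition on season, lead time, or the initial hydrologic state, in line with the conditional verification described in this post.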

Forecast verification helps to answer the following questions:

- How suitable are the forecasts for a given application? For example, are they sufficiently unbiased for the decisions to be made? Are they sufficiently skilful compared to a reference (i.e., baseline) forecast system to justify the method in use?

- What are the strengths and weaknesses of the forecasts? What is the forecast quality under different conditions, such as high flow vs. average flow, for particular seasons or initial states of the atmosphere or hydrologic system?

- What are the key sources of uncertainty and bias in the forecasts? This may involve verifying the forecasts against reference datasets that omit particular sources of uncertainty (e.g., hydrologic simulations).
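Two of the questions above, whether the forecasts are sufficiently unbiased and whether they are skilful relative to a baseline, can be made concrete with a minimal sketch. It assumes single-valued forecasts (for example, ensemble means), uses mean error as the bias measure, and uses a mean-squared-error skill score against a climatological reference. These metric choices, the function names, and the sample values are illustrative assumptions, not a prescribed method.

```python
from statistics import mean


def mean_error(forecasts, observations):
    """Mean error (bias): positive values indicate over-forecasting on average."""
    return mean(f - o for f, o in zip(forecasts, observations))


def mean_squared_error(forecasts, observations):
    """Average of the squared forecast errors."""
    return mean((f - o) ** 2 for f, o in zip(forecasts, observations))


def mse_skill_score(forecasts, reference_forecasts, observations):
    """Skill relative to a reference: 1 is perfect, 0 matches the reference,
    negative values are worse than the reference."""
    mse_reference = mean_squared_error(reference_forecasts, observations)
    return 1.0 - mean_squared_error(forecasts, observations) / mse_reference


# Hypothetical flows in arbitrary units.
observed = [10.0, 20.0, 30.0, 40.0]
forecast = [12.0, 21.0, 33.0, 42.0]
# Assumption: climatology is taken as the mean of the same observations,
# an in-sample simplification used here only to keep the example short.
climatology = [mean(observed)] * len(observed)

bias = mean_error(forecast, observed)
skill = mse_skill_score(forecast, climatology, observed)
print(f"bias = {bias:.2f}, MSE skill score = {skill:.3f}")
```

Computing the same quantities on subsets of the archive (for example, high-flow events only) is one way to examine forecast quality under different conditions.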

Ensemble or probabilistic forecasts contain more information than single-valued forecasts. Ensemble forecasts may be verified as empirical distribution functions or by fitting a smooth distribution function. Verification may focus on a measure of central tendency (e.g., the ensemble mean or median), on an integral of the error distribution, or on discrete statements derived from the probability distribution, such as the probability of flooding. In general, verifying only a measure of central tendency of the forecast distribution is inadequate, as it ignores the higher moments, such as the forecast spread.
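The three verification targets mentioned here can be sketched for a small ensemble treated as an empirical distribution. The example below assumes equally weighted members and uses the Brier score for a discrete statement (exceedance of a flood threshold) and the continuous ranked probability score (CRPS) as an integral measure. These are common choices rather than the only ones, and the threshold, member values, and function names are hypothetical.

```python
from statistics import mean


def exceedance_probability(members, threshold):
    """Fraction of ensemble members above the threshold."""
    return sum(m > threshold for m in members) / len(members)


def brier_score(probabilities, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    return mean((p - o) ** 2 for p, o in zip(probabilities, outcomes))


def crps_ensemble(members, observation):
    """CRPS of the empirical ensemble distribution for one observation:
    mean |x_i - y| minus half the mean pairwise member distance."""
    n = len(members)
    error_term = sum(abs(m - observation) for m in members) / n
    spread_term = sum(abs(a - b) for a in members for b in members) / (2 * n * n)
    return error_term - spread_term


# Hypothetical flow ensembles for two forecast dates and their observations.
ensembles = [[80.0, 100.0, 120.0, 140.0], [50.0, 60.0, 70.0, 80.0]]
observations = [130.0, 65.0]
flood_threshold = 110.0  # assumed threshold, for illustration only

probabilities = [exceedance_probability(e, flood_threshold) for e in ensembles]
outcomes = [1.0 if o > flood_threshold else 0.0 for o in observations]

brier = brier_score(probabilities, outcomes)
crps_first = crps_ensemble(ensembles[0], observations[0])
mean_error_first = mean(ensembles[0]) - observations[0]
print(brier, crps_first, mean_error_first)
```

The ensemble-mean error says nothing about spread, whereas the CRPS decreases when the members cluster more tightly around the observation, which is why a central-tendency check alone is not sufficient.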

The complete list of references can be found here.

This post is a contribution to the new HEPEX Science and Implementation Plan.
