Why are meteorologists apprehensive of ensemble forecasts?

Contributed by Anders Persson, Uppsala, Sweden

A colleague in my world-wide meteorological network made me aware of a CALMet conference in Melbourne, dealing with meteorological education and training. Through the website you can access the program with more or less extensive abstracts. I have no doubt that most presentations were relevant and interesting, but what surprised me was that a search for the key words “probability” or “ensemble” gave no hits. “Uncertainty” came up in only one presentation, no. 36, “To communicate forecast uncertainty by visualized product” by Jen-Wei Liu and Kuo-Chen Lu from the Central Weather Bureau in Taiwan.

This made me ponder once again why meteorologists are still apprehensive about ensemble systems (ENS) and probability forecasting.

1. Ensemble forecasting brings statistics into weather forecasting

Since the start of weather forecasting as we know it (in the 1860s), there has always been a rivalry between physical-dynamic-synoptic and statistical methods. Edward Lorenz’s famous 1959 experiment, in which he discovered the “butterfly effect”, was part of a project in the late 1950s to find out whether statistical methods could be as effective in weather forecasting as numerical techniques. The answer was not clear-cut at the time, but during the 1960s numerical weather prediction (NWP) made much larger advances than the statistical approaches. Thereafter, statistical methods were used only to calibrate NWP, in what became known as MOS (model output statistics).

Over a lunch at ECMWF Edward Lorenz, on one of his annual visits in the 1990s, told us a parable he had got from the renowned Norwegian meteorologist Arnt Eliassen:

All the world’s birds wanted to compete to see who could fly the highest. They all set off ascending, but one after the other they had to drop out. Finally, only the great golden eagle was left. But just as he too had to stop and return, a little sparrow who had been hiding in his feathers came out and managed to beat the eagle by a meter or two. The eagle is the dynamic NWP, Eliassen had told Lorenz (who told us), and the sparrow the statistical MOS.

To some extent the MOS can deal with uncertainties, but only in a limited way, since it is based on a deterministic forecast. It can estimate the general uncertainty at a certain range, but cannot distinguish between more and less predictable flow patterns. Making that distinction is the strength, the core value, of the ENS.

But ensemble forecasts are essentially statistical and probabilistic, and meteorological education has always avoided venturing into this domain, except for those who wanted to become climatologists, which in the old days was looked down upon. The ideal has been a physical-dynamic “Newtonian” approach, in which perfect or almost perfect forecasts were seen as possible, if only the meteorological community got enough money to purchase better computers.

Indeed, it has paid off: the predictability range has increased by about one day per decade. Our five-day deterministic forecasts today are as good and detailed as the two-day forecasts of the 1980s. But the demands and expectations of the public have also increased. Even if, a few decades from now, we can make more accurate and detailed seven-day forecasts, there will still be questions about their reliability. The problem of estimating uncertainty will always be with us.

2. The ensemble system is a Bayesian system

But also among those meteorologists who are used to statistics, there is another problem. I became aware of that when I traveled on behalf of ECMWF to different Member States. A frequent question was: -How can you compute probabilities from those 50 members when you are not sure that they are equally likely?

My answer then was that we did not know! We did not know the likelihood of every member and we didn’t even know if they were all equally likely (probably they were not). But the verification statistics were good, and they would not have been so good if our assumption had been utterly wrong.
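As a minimal sketch of what the equal-likelihood assumption means in practice: the probability of an event is simply the fraction of members in which it occurs. The function name `event_probability`, the optional `weights` argument and the rainfall numbers below are invented for illustration and are not taken from the ECMWF system.

```python
def event_probability(member_values, threshold, weights=None):
    """Probability that a forecast quantity exceeds `threshold`.

    With weights=None every member counts equally (the assumption
    discussed above). If the likelihood of each member were known,
    it could be passed in as `weights` instead.
    """
    if not member_values:
        raise ValueError("need at least one ensemble member")
    if weights is None:
        # Equal weights: we have no reason to prefer one member over another.
        weights = [1.0] * len(member_values)
    if len(weights) != len(member_values):
        raise ValueError("one weight per member is required")
    hit_weight = sum(w for v, w in zip(member_values, weights) if v > threshold)
    return hit_weight / sum(weights)


# Invented example: 50 members, 10 of which give more than 5 mm of rain.
rain_mm = [0.0] * 40 + [6.0] * 10
p_rain = event_probability(rain_mm, threshold=5.0)
print(f"P(rain > 5 mm) = {p_rain:.0%}")
```

Whether the equal weights are justified cannot be read from a single forecast; as noted above, it can only be judged afterwards from verification statistics.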

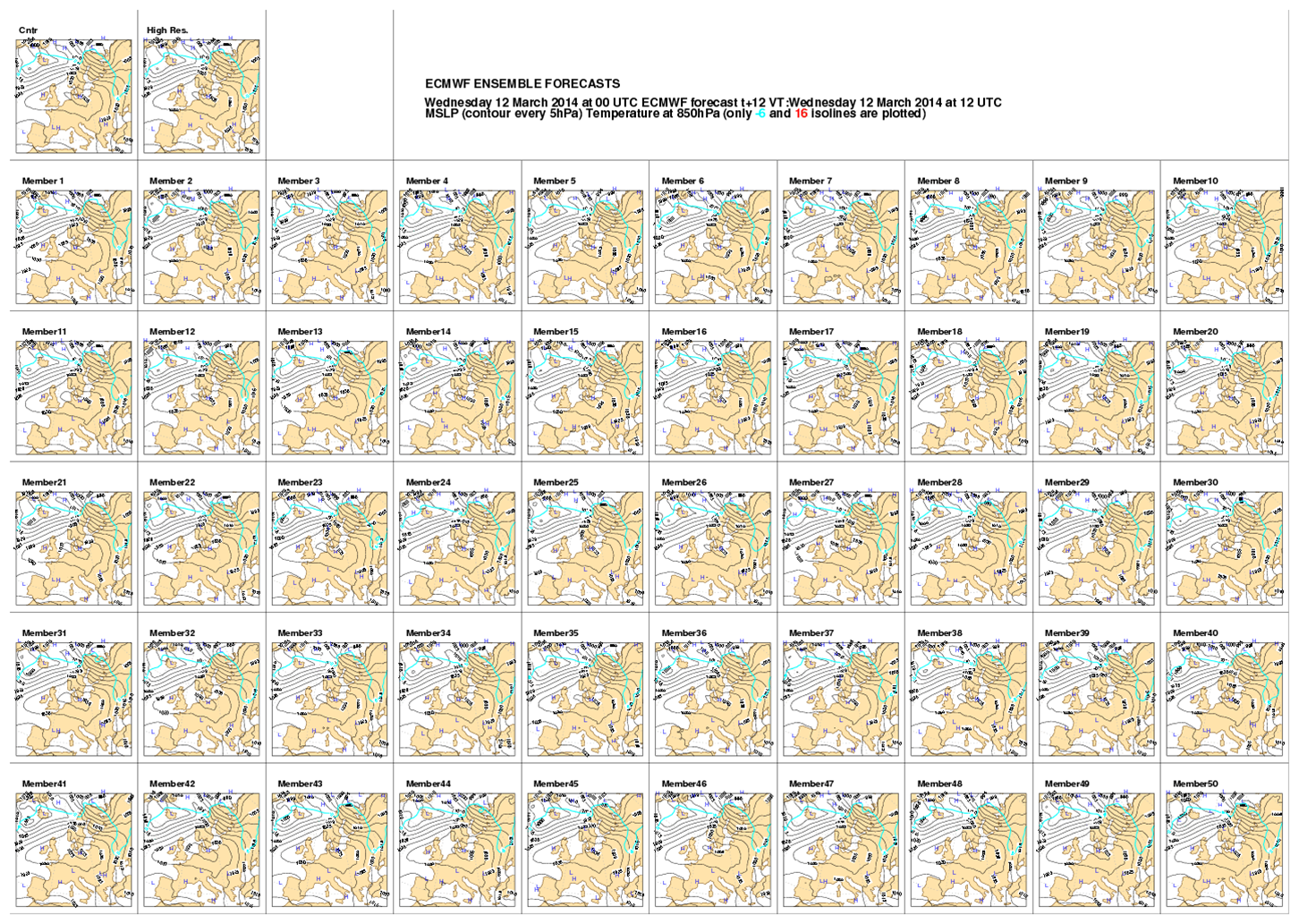

A typical “postage stamp map” from the ECMWF system. These 50 forecasts are not a priori equally likely, but since we do not know the probability of each of them we have to apply Laplace’s “principle of insufficient reason” and assume that they are equally likely – an assumption which makes the system Bayesian. Image courtesy of ECMWF.

Only later was I made aware that my answer was the same as the one Pierre-Simon de Laplace had given two centuries earlier, when he was developing what is today known as “Bayesian statistics”: – We do not know, but make a qualified guess and see how it works out. Bayesian statistics, in contrast to traditional “frequentist” statistics, acknowledges the usefulness of subjective probabilities, or degrees of belief. Laplace’s answer, which I unknowingly resorted to during my ECMWF days, is known as “Laplace’s principle of indifference”.

So part of the apprehension about ensemble forecasting cannot be attributed to ignorance, conservatism or “Newtonianism”, but has its basis in a long-standing feud between “Bayesian” and “frequentist” statisticians. A “Bayesian” can look at the sky and say “there is a 20% risk of rain”, whereas a frequentist would not dare to say that unless he had a diary which showed that rain had occurred in 34 cases out of 170 with similar sky, wind and pressure.

In recent years the gulf between “frequentists” and “Bayesians” has narrowed. Also, the calibration of the ENS data “à la MOS” has “washed away” much of the Bayesian character and provided a more “frequentist” forecast product.

3. What is left for the forecaster?

Bayesian methods should not be alien to experienced weather forecasters. Since weather forecasting started in the 1860s there has been a strong Bayesian element in the routines, perhaps not described as such, but nevertheless this is how forecasters worked before NWP. Who else but an experienced forecaster could look at the sky and give a probability estimate of rain? If the forecaster had a weather map to look at, the estimate would be even more accurate. Verification studies in the pre-NWP days of the 1950s showed that forecasters had a good “intuitive” grasp of probabilities.

But with the advent of deterministic NWP the “unconscious” Bayesianism among weather forecasters gradually evaporated. The NWP could tell very confidently that in 72 hours’ time it would be +20.7 C, WSW 8.3 m/s and rain 12.4 mm within the coming six hours.

Anybody could read that information; you didn’t need to be a meteorologist. But you needed to be a meteorologist to have an opinion about the quality of the forecast: -Would it perhaps be cooler? The wind weaker? How likely is the rain?

There are currently more weather forecasters around than at any time before, in particular in the private sector where advising customers about their decision making is an important task (Photo from a training course at Meteo Group, Wageningen. Permission to use by Robert Muerau)

The risk was always that this forecast, even against the odds, would verify. So wasn’t it most tactical to accept the NWP? After all, if the forecast was wrong, the meteorologist had something to blame. Some meteorologists took this easy road, but most tried to use their experience, knowledge of the models and meteorological know-how to make a sensible modification of the NWP, including an assessment of the reliability of the forecast. If the last NWP runs had been “jumpy” and/or there were large divergences among the available models, this was taken as a sign of unreliability.

The “problem” for the weather forecasters was that with the arrival of the ENS they were deprived of even this chance to show their skill. The “problem” with a meteogram from the ENS, compared to a more traditional deterministic one from an NWP model, was that “anybody” could read the ENS meteogram! You didn’t need to be a meteorologist, not even a mathematically educated scientist. Einstein’s famous “grandma” could read the weather forecast and understand its reliability!

“You do not really understand something unless you can explain it to your grandmother.” – Albert Einstein

So what is left for the meteorologist?

I will stop here, because this text is already long enough. But the question above is really what educational and training seminars, conferences and workshops should be more focused on. I am personally convinced that the meteorologists have a role to play.

My conviction is based on my experiences from the hydrological forecast community, in particular the existence of this site. Is there any corresponding “Mepex“?

My conviction is also based on my experience as a forecaster myself, of how the general public (and not a few scientists) need help to relate the uncertainty information to their decision making.

My conviction is finally based on my experiences from history that new tools always make traditional craftsmen more effective and prosperous – provided they are clever enough to see the new opportunities. Else they will miss the bus . . .

November 13, 2017 at 23:37

After a decade or so of using multiple sourced ensemble forecasts (EC, UK and NCEP), and 30 years or so of using “poor man’s ensembles” of multiple countries’ output, I offer the following comments. Often ensembles follow the characteristics of the model and can lean towards the control run and are not “independent solutions”. Often the best higher resolution operational models verify better. Multi model ensembles can hide useful solutions, for example when a weaker model is diluted by others. Always remember that the final verifying analysis can be a solution which is outside of the ensemble envelope. Agreement between solutions does not mean the solution is correct.

Skill in using ensemble techniques can be developed and “intuition” concerning which solutions are possible can be usefully deployed by Forecasters as well as automatic systems.

November 14, 2017 at 12:46

These are interesting comments by anonymous T2mike, in particular since I have heard them before many times during the last 25 years. But . . .

a) “Often ensembles follow the characteristics of the model and can lean towards the control run and are not ‘independent solutions’.”

There are two problems with this statement:

1. If the spread is small (a highly predictable situation) the members will of course lean towards the control.

2. The “independence” or non-correlation is with respect to the deviations from the ensemble mean. If there is dependence, the system is faulty, but T2mike does not provide any evidence that this is the case.

b) “Often the best higher resolution operational models verify better.”

There are three problems with this statement:

1. How do we in an operational context know which deterministic model is “best”?

2. How does T2mike compare verifications of deterministic models and ensembles?

3. And again, where are these verifications or even daily logs?

c) “Always remember that the final verifying analysis can be a solution which is outside of the ensemble envelope.”

The only problem with this statement is that, since the introduction of ensembles 25 years ago, we have repeatedly told users that for an ensemble of N members the verification will fall outside the envelope about 2/N of the time. For a 50-member ensemble this means 4% of the time, i.e. about one day per month; for a 20-member ensemble, 10% of the time, or three days per month.
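The arithmetic behind this rule of thumb can be checked with a short sketch. It assumes an idealised, perfectly reliable ensemble in which the verification is statistically indistinguishable from the members; in that case the exact fraction is 2/(N+1), which 2/N approximates closely for realistic N. The function names and the choice of a standard Gaussian distribution are illustrative assumptions, and the simulation looks at a single variable at a single location only.

```python
import random


def outside_fraction_rule_of_thumb(n_members):
    """The 2/N rule of thumb quoted in the text."""
    return 2.0 / n_members


def outside_fraction_exchangeable(n_members):
    """Exact value if truth and members come from the same distribution.

    The truth is then equally likely to take any of the N+1 ranks, and
    two of those ranks (lowest and highest) lie outside the envelope.
    """
    return 2.0 / (n_members + 1)


def simulate_outside_fraction(n_members, n_cases=20000, seed=0):
    """Monte Carlo check with an assumed standard Gaussian distribution."""
    rng = random.Random(seed)
    outside = 0
    for _ in range(n_cases):
        members = [rng.gauss(0.0, 1.0) for _ in range(n_members)]
        truth = rng.gauss(0.0, 1.0)
        if truth < min(members) or truth > max(members):
            outside += 1
    return outside / n_cases


for n in (50, 20):
    rule = outside_fraction_rule_of_thumb(n)
    exact = outside_fraction_exchangeable(n)
    simulated = simulate_outside_fraction(n)
    print(f"N={n}: 2/N={rule:.3f}, 2/(N+1)={exact:.3f}, "
          f"simulated={simulated:.3f}, days per 30-day month={30 * rule:.1f}")
```

A real ensemble that is under-dispersive would miss more often than this, which is one of the things verification statistics such as rank histograms are used to detect.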

d) “Agreement between solutions does not mean the solution is correct.” See c) above. For “poor man’s ensembles” of 2-5 models this is even more true.

e) “Multi model ensembles can hide useful solutions, for example when a weaker model is diluted by others.” and “Skill in using ensemble techniques can be developed and ‘intuition’ concerning which solutions are possible can be usefully deployed by Forecasters as well as automatic systems.”

The problem with these sentences is that I do not quite understand what T2mike means. How is a weaker model “diluted” by others? How can “intuition” be developed? Any examples?

While writing this comment it struck me that these types of loose, unsubstantiated critical opinions have been around not just for the last 25 years, but also during the preceding 25 years (1965-90), then directed towards the deterministic numerical weather prediction models. I was a forecaster at the time and gradually became highly sceptical about this kind of loose talk. The NWP and the ENS had, and still have, their problems, but they are easily detected and fixed in due course. This type of criticism, however, took aim at unspecified properties which were almost impossible to verify.

The final problem with this type of criticism is NOT that we who have worked with, or are working with, NWP or ENS get upset or depressed; the problem is on the other side. In the same way as half a generation of weather forecasters in the period 1965-90 “missed the bus” through their hostility to new techniques, so did another half-generation in 1990-2015, and yet another might in the coming 25 years.

T2mike spelled “forecaster” with a capital “F”. It could be a typo, but it could also reflect an inflated view of his profession. The conservative forecasters might end up as “Forecasters”, just like those popular and touristic “Stationmasters”, with a capital “S”, on the platforms, while the real work is done and the decisions are made elsewhere by highly qualified staff in the “Traffic Control Room”.