When does a model value become an observation?

Posted by @Hydrology_WSL (Massimiliano Zappa), also on behalf of @FPappenberger (Florian Pappenberger), @stagge_hydro (Jim Stagge) and @BteBrake (Bram te Brake). We also acknowledge tweets by @highlyanne (Anne Jefferson) and @YoungHydrology.

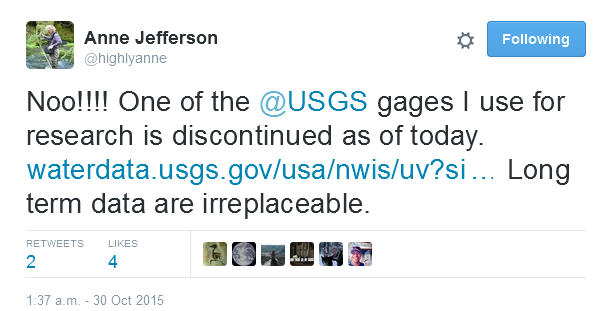

This blog post started out of the blue on October 30th 2015, when a post by @highlyanne appeared in the Twitter timeline of @Hydrology_WSL:

She is unfortunately not the only researcher (modeler) confronted with interruption of key “observations” or “sudden changes” in the quality of the “observations” they are using.

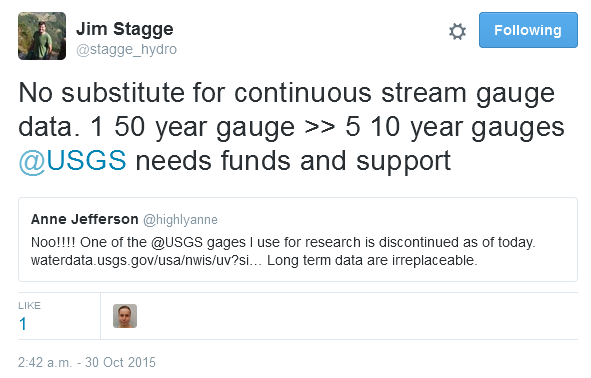

It was the reply of @stagge_hydro that motivated the first intervention of @Hydrology_WSL in the discussion.

Long-term monitoring has immense value. This is particularly relevant today given the concern for gradual or sudden changes in our environment due to factors such as land development and a changing climate.

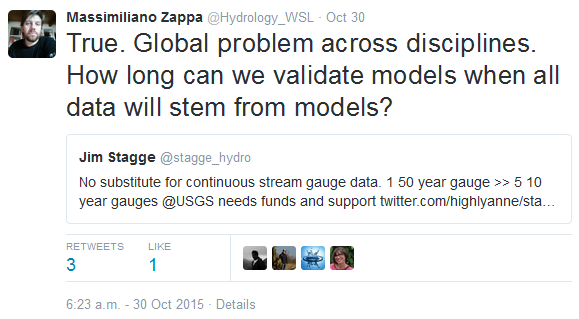

@Hydrology_WSL is in the fortunate position of working in an organization that is aware of these problems (see here). There are plenty of long-term treasures across disciplines from which we can always obtain very useful information for validating models, detecting trends or establishing test-beds. In times of decreasing funding, everybody is re-evaluating priorities and eventually deciding to discontinue some observations. Consequently, we see a steady decline of long-established networks. Maintaining an experimental area or a single measurement location is far more costly than maintaining model code on a computer.

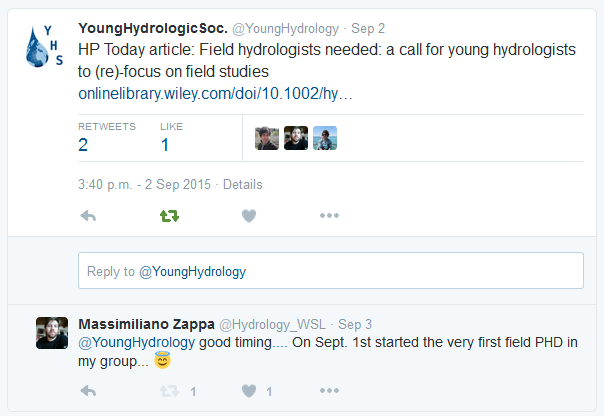

@Hydrology_WSL here briefly opens a door to another tweet, posted by @YoungHydrology in early September:

In summary: less and less field data are available for more and more publications focused on modeling (Vidon, 2015). By the way, in case you didn't know, @Hydrology_WSL used to be one of the driest hydrologists in the community, but he is improving (see his reply to the tweet of @YoungHydrology above).

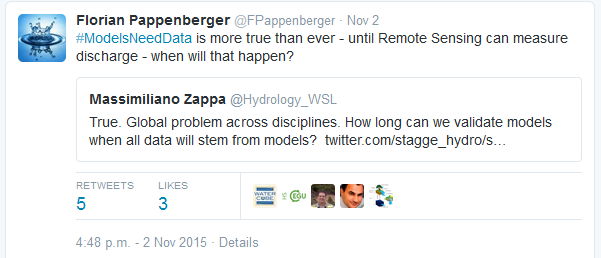

Back to the “discontinued observations” timeline: @FPappenberger intervened and elaborated on a possible future way to obtain observed discharge.

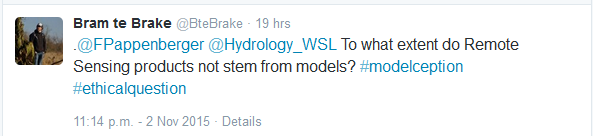

The virtual discussion had a decisive and highly interesting turning point when @BteBrake posted an #ethicalquestion:

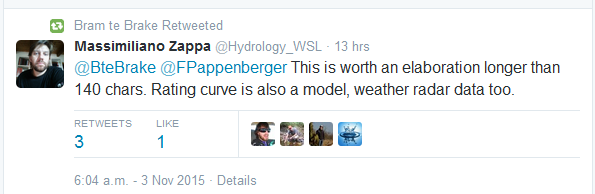

This triggered a very interesting follow-up chat that began with a statement from @Hydrology_WSL:

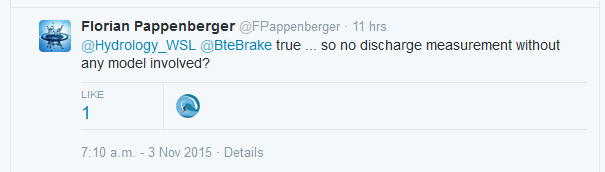

@FPappenberger later replied:

So now this blog and the comment box below it give us and the readers of @hepexorg the opportunity to use more than 140 characters to discuss this.

Besides the basic need to take care of long-term measurements (see GCOS), we find the point where @FPappenberger and @Hydrology_WSL are confronted with the #ethicalquestion by @BteBrake really interesting. When using runoff data, remote sensing data and weather radar data, we generally speak of observations. Also, in the papers we write, these data are often placed in the “Data” section and not in the “Methods” section. The #ethicalquestion obliges us to take a closer look at the source of the “data”.

“The observed discharge case”

We can rediscover that observed runoff data are basically the output of an (empirical) model linking observed water level and discharge. Numerous scientists have published work on this specific topic, taking uncertainties into account (@FPappenberger too, if @Hydrology_WSL remembers well). These findings are implemented in the form of rating curves, which our hydrometric services use to generate continuous discharge time series.
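To make the “empirical model” concrete: rating curves are often fitted as a power law, Q = a(h − h0)^b, where h is the water level and h0 the level of zero flow. The sketch below is a minimal, single-segment example under that assumption; the parameter values `A`, `H0` and `B` are hypothetical and not taken from any real station. Operational rating curves usually have several segments and are revised after new gaugings.

```python
# Hypothetical single-segment rating curve Q = A * (h - H0)**B (illustration only).
A = 12.0   # scale coefficient [m^3/s per m^B]
H0 = 0.20  # stage of zero flow [m]
B = 1.6    # exponent [-]


def stage_to_discharge(h: float, a: float = A, h0: float = H0, b: float = B) -> float:
    """Convert an observed water level h [m] into discharge [m^3/s]."""
    if h <= h0:
        return 0.0  # below the zero-flow stage the curve gives no discharge
    return a * (h - h0) ** b


for h in (0.15, 0.50, 1.20, 2.00):
    print(f"h = {h:.2f} m -> Q = {stage_to_discharge(h):.2f} m^3/s")
```

Every discharge value produced this way is a model output, even though it is later stored and distributed as an “observation”.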

So, when does an observation become a model value?

This is quite straightforward. @stagge_hydro argues that the switch between “observations” and “modeled” data occurs when the data pass from the person taking the observation to a second user. During that hand-off, estimates of uncertainty are often lost, and the second user views a single value without error bounds as a true observation. All measurements have a degree of uncertainty due to sampling error (instrument, observer, etc.). You then introduce additional uncertainty if you use a physical equation, rating curve, or model to convert measurements into a derived product. For instance, there is a certain amount of uncertainty in measuring river elevation due to instrumentation. You then use this elevation to estimate river flow with a rating curve that was developed by repeated sampling of the river. Uncertainty in the rating curve increases at both extremes because of extrapolation or relatively few observations. Most streamflow monitoring organizations keep track of uncertainty, but it often isn't used in subsequent analysis, which in turn leads people to perceive these derived values as observations rather than modeled data. A case for @FPappenberger and Beven (2006).
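The hand-off problem can be illustrated with a small Monte Carlo sketch: instead of a single discharge value, sample the stage error and the rating-curve parameters and look at the spread of the resulting discharge. The rating curve, all error magnitudes (1 cm stage error, parameter standard deviations) and the choice of independent normal errors are assumptions made for illustration only.

```python
import random
import statistics

# Hypothetical rating curve Q = a * (h - h0)**b with assumed parameter uncertainty.
A_MEAN, A_SD = 12.0, 0.6      # scale coefficient
H0_MEAN, H0_SD = 0.20, 0.02   # stage of zero flow [m]
B_MEAN, B_SD = 1.6, 0.05      # exponent [-]
STAGE_SD = 0.01               # assumed instrument error of the water level [m]


def discharge_interval(h_obs: float, n: int = 10_000, seed: int = 42):
    """Return (5th percentile, median, 95th percentile) of discharge [m^3/s]
    for an observed stage h_obs [m], sampling stage error and rating-curve
    parameters as independent normal variables."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        h = rng.gauss(h_obs, STAGE_SD)
        a = rng.gauss(A_MEAN, A_SD)
        h0 = rng.gauss(H0_MEAN, H0_SD)
        b = rng.gauss(B_MEAN, B_SD)
        samples.append(a * max(h - h0, 0.0) ** b)
    cuts = statistics.quantiles(samples, n=20)  # 19 cut points: 5 %, 10 %, ..., 95 %
    return cuts[0], statistics.median(samples), cuts[-1]


for h_obs in (0.30, 1.20, 3.00):
    lo, med, hi = discharge_interval(h_obs)
    print(f"h = {h_obs:.2f} m: Q = {med:.2f} m^3/s (90 % interval {lo:.2f} to {hi:.2f})")
```

The single number usually stored in a database hides this range. With these assumed errors the relative spread is largest at the lowest stage, where the uncertain zero-flow level h0 matters most; the sketch does not model extrapolation beyond the gauged range, which is what widens real rating-curve uncertainty at high flows.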

So, when does a model value become an observation?

In this case, we think this happens when the “modeled” time series arrives in the database of the hydrometric service. From this point on, we can have it delivered to us and use it to evaluate models. We can call this a case of inheritance of status from the observed water level to the derived discharge. Maybe we have also grown used to calling certain variables observations (e.g. discharge) without much thought about whether they really are. This then often leads to a ‘loss of memory’, meaning we are actually unable to reconstruct the original in situ measurement (e.g. water level) or any of the adjacent data and models needed (velocity profiles, rating curves, etc.).

“The weather radar case”

Here the situation is even more obvious. The “observed” weather radar fields we use in our hydrological simulations inherit their status from the cloud of points delivered by the radar after each scan. @Hydrology_WSL's close colleague Dr. Urs Germann from @MeteoSchweiz published a really nice paper in 2006 on the science (and models) needed to transform this data cloud into reflectivity, which can then be translated into millimeters of QPE (quantitative precipitation ESTIMATES) and which many hydrological applications treat as “observed values”.
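The step from reflectivity to millimeters can be sketched with a Z–R power law, Z = aR^b. The sketch assumes the classic Marshall–Palmer coefficients (a = 200, b = 1.6) and a 5-minute scan interval; it is not the processing chain of any particular weather service, which typically also corrects for clutter, beam shielding, the vertical profile of reflectivity and bias.

```python
import math

# Assumed Marshall-Palmer coefficients for Z = A * R**B (Z in mm^6 m^-3, R in mm/h).
A = 200.0
B = 1.6


def dbz_to_rain_rate(dbz: float, a: float = A, b: float = B) -> float:
    """Convert reflectivity [dBZ] to rain rate [mm/h] by inverting Z = a * R**b."""
    z = 10.0 ** (dbz / 10.0)  # dBZ -> linear reflectivity factor
    return (z / a) ** (1.0 / b)


def rain_rate_to_dbz(r: float, a: float = A, b: float = B) -> float:
    """Inverse of dbz_to_rain_rate (requires r > 0), handy as a consistency check."""
    return 10.0 * math.log10(a * r ** b)


def accumulate(dbz_series, interval_min: float = 5.0) -> float:
    """Rain depth [mm] from consecutive scans, assuming a constant rate per interval."""
    return sum(dbz_to_rain_rate(d) * interval_min / 60.0 for d in dbz_series)


scans = [20.0, 30.0, 35.0, 28.0]  # hypothetical reflectivities of one pixel [dBZ]
print(f"QPE over {len(scans) * 5} min: {accumulate(scans):.2f} mm")
```

Changing `A` or `B` changes the “observed” millimeters without any change in what the radar measured, which is exactly why QPE is an estimate.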

So, when does a model value become an observation?

In this case, @Hydrology_WSL thinks this happens when the time series arrives in the database of the meteorological service. From this point on, we can have it delivered to us and use it to force models. Do you see the analogy with the discharge case above?

Ensemble QPE have been available for about 10 years now (Germann et al., 2009; Liechti et al., 2013, and many other contributors). Since ensemble QPE “post-process” weather radar QPE, they are now seen again as a model: an uncertainty model that perturbs the observed QPE fields.
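Ensemble QPE can be pictured as many plausible versions of one “observed” field. The sketch below is a strongly simplified illustration and not the method of Germann et al. (2009): it multiplies the field by random factors expressed in dB (one field-wide term plus an independent pixel term, with hypothetical standard deviations), whereas the published approach uses spatially and temporally correlated perturbations derived from radar error statistics.

```python
import random
from typing import List

Field = List[List[float]]  # precipitation depth per pixel [mm]


def perturb_qpe(field: Field, n_members: int = 20, sigma_bias_db: float = 1.5,
                sigma_pixel_db: float = 1.0, seed: int = 1) -> List[Field]:
    """Return n_members perturbed copies of a QPE field.

    Each value v becomes v * 10**(eps / 10), where eps [dB] is a field-wide
    bias plus independent pixel noise (no spatial correlation, unlike real
    ensemble QPE). Dry pixels stay dry because the perturbation is multiplicative.
    """
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        bias_db = rng.gauss(0.0, sigma_bias_db)  # one bias draw per member
        member = [
            [v * 10.0 ** ((bias_db + rng.gauss(0.0, sigma_pixel_db)) / 10.0) for v in row]
            for row in field
        ]
        members.append(member)
    return members


qpe = [[0.0, 1.2, 3.4], [0.5, 2.0, 0.0]]  # hypothetical 2x3 field [mm]
ensemble = perturb_qpe(qpe)
spread = [m[0][2] for m in ensemble]
print(f"pixel (0, 2): min {min(spread):.2f} mm, max {max(spread):.2f} mm")
```

The unperturbed field is then just one member among many, which is the shift in status described above.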

“Epilogue”

We presented here the discussion triggered by one tweet among the millions posted every day. A small group of hydrologists developed it into this post, addressing a quite relevant issue.

When does a model value become an observation?

We argue that as soon as “modeled” values of variables derived from monitored raw information (by the measurement device itself or by the user processing the raw data), and later used to force or evaluate hydrological models, reach the form of a product that can be uploaded into the database of an official data provider, this model value is “upgraded” and becomes an observation. More and more variables provided by weather services are linked to model outputs, to algorithms, or to both. A simple example: observed relative humidity is a “model output” of an equation with air temperature and dew point temperature as inputs (many approximations can be used for this, as @Hydrology_WSL learned in the hydrometeorology lecture of Dr. Dietmar Grebner in 1996).
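The humidity example can be written out directly. Relative humidity is the ratio of the saturation vapour pressure at the dew point to that at the air temperature, and the saturation vapour pressure is usually approximated with a Magnus-type formula. The sketch compares two commonly used coefficient sets (our choice, for illustration); the same inputs yield slightly different “observed” values.

```python
import math

# Two common Magnus-type parameter sets (C [hPa], A [-], B [degC]) for
# e_s(T) = C * exp(A * T / (B + T)), T in degC, over liquid water.
MAGNUS_SETS = {
    "tetens": (6.108, 17.27, 237.3),
    "alduchov_eskridge": (6.1094, 17.625, 243.04),
}


def saturation_vapour_pressure(t_c: float, params=MAGNUS_SETS["alduchov_eskridge"]) -> float:
    """Saturation vapour pressure [hPa] at temperature t_c [degC]."""
    c, a, b = params
    return c * math.exp(a * t_c / (b + t_c))


def relative_humidity(t_c: float, td_c: float, params=MAGNUS_SETS["alduchov_eskridge"]) -> float:
    """Relative humidity [%] from air temperature t_c and dew point td_c [degC]."""
    return 100.0 * saturation_vapour_pressure(td_c, params) / saturation_vapour_pressure(t_c, params)


for name, params in MAGNUS_SETS.items():
    rh = relative_humidity(20.0, 10.0, params)
    print(f"{name:>18}: RH(T = 20 degC, Td = 10 degC) = {rh:.2f} %")
```

The constant C cancels in the ratio, so only the exponential coefficients affect the result; the differences are small here but show that even this “observation” depends on a modeling choice.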

We guess we need some final words to start a discussion. We dare to post this statement: just as the weather radar community and the hydraulic community succeeded in convincing us that their data are observations, we should be able to find supporters who see our hydrological model outputs as observations. Not as physical field measurements, but as valuable derived observations of water states and fluxes that support our research. Some steps in this direction are emerging in studies that use simulated discharge to assess climate impacts on vegetation, bedload and limnology (e.g. Junker et al., 2015; Raymond-Pralong et al., 2015). Including discharge time series from models in the data section of papers (with appropriate references) would therefore be a realistic option, and we would see it as good practice.

Discussion is open, feel free to use more than 140 characters.

References: here.
