Good practices in forecast uncertainty assessment and communication

Contributed by Lionel Berthet and Olivier Piotte

The French Flood Forecasting Service (SCHAPI) recently carried out a survey to identify good practices in the assessment and communication of forecast uncertainty.

This was motivated by SCHAPI's plans: it currently publishes hydrological warning maps (in French, “Carte de vigilance crues”) and makes observed river levels and discharges available in real time (see real-time data here and post-event examples here), but it also intends to provide the public with hydrological forecasts issued by the local flood forecasting centres in the near future.

SCHAPI forecasters acknowledge that, before forecasts can be published on its website, their uncertainties must first be assessed and properly communicated, and that special attention needs to be paid to how this is done. Since there is so far no systematic national guidance on uncertainty assessment and communication, a framework shared by the national forecasters’ community needs to be built. The following aspects are of special interest:

- Uncertainty understanding,

- Forecast uncertainty assessment,

- Communication (in various situations).

To start building this framework, we prepared a survey on good practices in the assessment and communication of forecast uncertainty and applied it to a selection of similar public institutions that also provide warnings and forecasts to civil protection officers.

Results from this survey were presented during the session ‘Hydrology for decision-making: the value of forecasts, predictions, scenarios, outlooks and foresights’ at the EGU 2014 General Assembly in Vienna (see abstract here) and are summarized in this post.

The survey

The survey followed a semi-closed format, which included:

- a single questionnaire,

- open discussions with respondents, and

- a common analysis of the answers.

Several means were used to gather the information needed, including:

- Literature review,

- Face-to-face meetings,

- Phone interviews (mostly conducted in English, French and German),

- Email discussions (questionnaire, answers, further discussions, etc.).

The survey questionnaire contained four main questions:

- Do you assess forecast uncertainty? If so, which tools/models do you use?

- Do you publish water level or discharge forecasts? If so, are the forecasts supplemented with information about the associated uncertainty? Which representation do you use (text, forecast interval, other…)?

- Do you specifically communicate uncertainty information to civil authorities? If so, are they used to such information? Is this information welcomed? Is it used?

- Do you encounter specific pitfalls in your working context? In uncertainty assessment? In uncertainty communication?

The survey was not intended to be a rigorous sociological study, but rather a ‘starting point’ for better understanding current practices and implementing new tools for the French flood forecasting service.

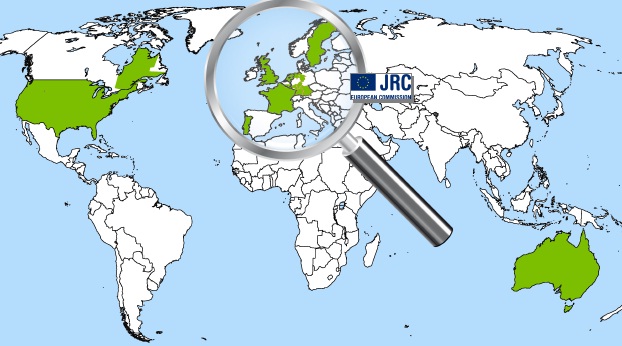

A total of 18 answers were collected, from public flood forecasting centres (several from the EFAS JRC network) and hydropower suppliers (EDF, CNR, Hydro-Québec).

| Institution | Country | Function | Survey method |
|---|---|---|---|
| SMHI | Sweden | Public safety | Bibliography + interview |
| Bureau of Meteorology | Australia | Public safety | Bibliography + interview |
| National Weather Service (OHD & RFC) | USA | Public safety | Bibliography + interview |
| INAG | Portugal | Public safety | Questionnaire |
| CEHQ | Canada (Québec) | Public safety | Interview |
| LUBW/HVZ | Germany / Baden-Württemberg | Public safety | Questionnaire |
| LFU/HND | Germany / Bayern | Public safety | Questionnaire |
| LUWG/HMZ | Germany / Rheinland-Pfalz | Public safety | Questionnaire |
| LUG/HWZ | Germany / Hessen | Public safety | Questionnaire |
| NLWKN / HWVZ | Germany / Niedersachsen | Public safety | Questionnaire |
| Met Office / Environment Agency & SEPA | United Kingdom | Public safety | Bibliography + interview |
| Rijkswaterstaat / VWM | Netherlands | Public safety | Questionnaire |
| Direction générale opérationnelle de la mobilité et des voies hydrauliques | Belgium / Wallonia | Public safety | Interview |
| JRC (EFAS) | European Union | Public safety | Bibliography + questionnaire |
| Météo-France / SHOM | France | Public safety (storm surge) | Interview |
| EDF | France | Hydropower | Bibliography + interview |
| CNR | France | Hydropower | Interview |
| Hydro-Québec | Canada (Québec) | Hydropower | Interview |

Main results

- Do many services deal with uncertainties?
    - Forecast uncertainty assessment: 89% (16 answers)
    - Uncertainty publication (e.g., on the Internet): 94% (15 of 16 answers)
    - Specific communication to civil authorities: 50% (8 answers)
    - At least an a posteriori evaluation of the uncertainty assessment: 25% (4 answers)
- Which uncertainty sources are considered?
    - Meteorology: always
    - Hydrology / hydraulics: often, but not always
- How do services deal with forecast uncertainty?
    - Ensemble forecasts: 69% (11 answers)
    - Meteorological multi-model: 44% (7 answers)
    - Several meteorological scenarios: 38% (6 answers)
    - Post-processing: 69% (11 answers)
    - Hydrological/hydraulic multi-model: 13% (2 answers)
    - Controlled subjective analysis: 6% (1 answer)
- How is forecast uncertainty communicated?
    - Quantiles: 87% (13 of 15 answers)
    - Text (written comments): 38% (6 answers)
    - Min/max: 33% (5 answers)
    - Probability of exceeding a threshold: 33% (5 answers)
    - Pessimistic scenario: 7% (1 answer)
- How well is forecast uncertainty accepted? (open question)
    - Highly variable, depending on each country's general ‘uncertainty culture’.
    - It is commonly acknowledged that a language shared with stakeholders is needed to deal efficiently with uncertainty, with joint training of forecasters and key stakeholders and formal messages agreed upon in common (e.g., SMHI in Sweden, the EA in the UK and the JRC at the European scale).

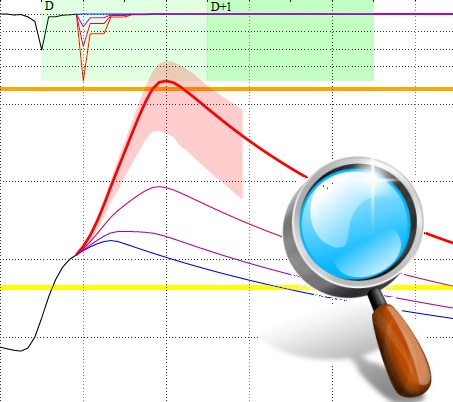

Different visualisations of hydrological forecast uncertainty encountered during the survey
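As a rough illustration of how the communication formats reported in the survey relate to one another, the sketch below derives quantiles, a min/max range and a probability of exceeding a threshold from one and the same ensemble forecast. It is a minimal Python sketch under stated assumptions: the ensemble of forecast peak water levels (in metres), the 3.5 m threshold, the choice of the 10%, 50% and 90% quantiles and the function name `summarise_ensemble` are all hypothetical and not taken from any surveyed service.

```python
from statistics import quantiles


def summarise_ensemble(members, threshold):
    """Summarise an ensemble forecast (e.g. peak water levels in m) in the
    formats reported by the survey: quantiles, min/max and the probability
    of exceeding a threshold. Hypothetical helper for illustration only."""
    if len(members) < 2:
        raise ValueError("need at least two ensemble members")
    # Nine cut points at 10%, 20%, ..., 90% (linear interpolation between members)
    deciles = quantiles(members, n=10, method="inclusive")
    # Raw ensemble estimate: fraction of members strictly above the threshold
    n_above = sum(1 for m in members if m > threshold)
    return {
        "q10": deciles[0],
        "q50": deciles[4],
        "q90": deciles[8],
        "min": min(members),
        "max": max(members),
        "p_exceed": n_above / len(members),
    }


if __name__ == "__main__":
    # Hypothetical 11-member ensemble of forecast peak water levels (m)
    levels = [2.1, 2.4, 2.5, 2.7, 2.8, 3.0, 3.1, 3.3, 3.6, 4.0, 4.4]
    s = summarise_ensemble(levels, threshold=3.5)
    print(f"Median {s['q50']:.1f} m, 80% interval {s['q10']:.1f}-{s['q90']:.1f} m")
    print(f"Range {s['min']:.1f}-{s['max']:.1f} m")
    print(f"Probability of exceeding 3.5 m: {s['p_exceed']:.0%}")
```

The exceedance probability here is simply the fraction of members above the threshold; since raw ensembles are often under-dispersive, an operational service would more likely calibrate it through post-processing first.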

Lessons learnt

- Most forecasting services deal with uncertainty, but there is no consensus on the method(s) to be used by operational services.

- Two methods are most commonly used: a large majority of services use ensemble approaches and/or post-processing of deterministic forecasts.

- All services are aware of the need for a reliable assessment of the meteorological uncertainty.

- Uncertainty communication is recognized as valuable.
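Post-processing of deterministic forecasts can take many forms. One of the simplest, shown here only as an example and not as the method used by any surveyed service, wraps the deterministic value in an interval built from the empirical distribution of past forecast errors. The sketch assumes paired past forecasts and observations of discharge (m3/s) at a single lead time; the data and the function name `error_interval` are hypothetical.

```python
from statistics import quantiles


def error_interval(past_forecasts, past_observations, new_forecast):
    """Return an 80% interval around a deterministic forecast, built from the
    empirical 10% and 90% quantiles of past errors (observed minus forecast).
    Hypothetical helper for illustration only."""
    if len(past_forecasts) != len(past_observations):
        raise ValueError("forecasts and observations must be paired")
    errors = [obs - fc for fc, obs in zip(past_forecasts, past_observations)]
    if len(errors) < 2:
        raise ValueError("need at least two past errors")
    # Nine cut points at 10%, 20%, ..., 90% of the error distribution
    deciles = quantiles(errors, n=10, method="inclusive")
    return new_forecast + deciles[0], new_forecast + deciles[8]


if __name__ == "__main__":
    # Hypothetical archive of discharge forecasts and observations (m3/s)
    # at a single lead time
    forecasts = [100, 120, 150, 90, 200, 180, 130, 110, 160, 140, 170]
    observations = [90, 115, 150, 95, 210, 195, 150, 90, 168, 152, 173]
    low, high = error_interval(forecasts, observations, new_forecast=150)
    print(f"Forecast 150 m3/s, 80% interval {low:.0f}-{high:.0f} m3/s")
```

This assumes past errors are representative of the current situation. In practice errors usually grow with lead time and often with flow magnitude, so such an error model would at least be built separately for each lead time.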

The survey was also useful to us: it helped convince our fellow national forecasters and our stakeholders of the need to implement pre-operational experiments on uncertainty quantification and to continue training forecasters in communicating forecast uncertainty.

We thank all the forecasting services that answered our survey.

Additional comments are welcome here!

July 14, 2014 at 01:20

I would be particularly interested if anyone had examples of concise ways to communicate uncertain forecasts. If you want to be specific about a deterministic forecast, you can just say “Maximum temperature will be 35 degrees C”, but a probabilistic forecast could be “There is an 8 percent probability anomaly relative to the highest tercile of climatological precipitation over 1981-2000”, which is overwhelming to the casual user.

I’ve seen attempts at simplifying messages, e.g. the Bureau of Meteorology gives a range of likely precipitation (e.g. “2-4 mm rain tomorrow”) without ever talking about exceedance probabilities in its products. But then the probability is ambiguous to the user. If you read the help file, you’ll find that the two numbers are the “likely rainfall” (50% chance of exceedance) and the “possible higher rainfall” (25% chance of exceedance). However, because they also provide the probability of any precipitation, you can imagine the strange situation of seeing “30% chance of rain / 0 mm amount”.
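The situation described in this comment is easy to reproduce. In the sketch below, which uses a hypothetical 20-member rainfall ensemble and is not BoM data or BoM's actual method, the ‘likely rainfall’ is taken as the amount with a 50% chance of exceedance (the median) and the ‘possible higher rainfall’ as the amount with a 25% chance of exceedance (the 75th percentile). Because 14 of the 20 members are dry, the chance of rain is 30% while the median amount is 0 mm. The function name `rainfall_summary` is illustrative only.

```python
from statistics import quantiles


def rainfall_summary(members_mm):
    """Chance of any rain and the amounts with a 50% and a 25% chance of
    being exceeded, estimated from an ensemble of rainfall totals (mm).
    Hypothetical helper for illustration only."""
    if len(members_mm) < 2:
        raise ValueError("need at least two ensemble members")
    chance_of_rain = sum(1 for r in members_mm if r > 0) / len(members_mm)
    # Quartile cut points: 25th, 50th and 75th percentiles
    _, q50, q75 = quantiles(members_mm, n=4, method="inclusive")
    return {
        "chance_of_rain": chance_of_rain,
        "likely_mm": q50,           # 50% chance of exceedance (median)
        "possible_higher_mm": q75,  # 25% chance of exceedance
    }


if __name__ == "__main__":
    # Hypothetical 20-member ensemble: 14 dry members, 6 wet ones
    ensemble = [0.0] * 14 + [1.0, 2.0, 3.0, 5.0, 8.0, 12.0]
    s = rainfall_summary(ensemble)
    print(f"{s['chance_of_rain']:.0%} chance of rain, "
          f"likely amount {s['likely_mm']} mm")
```

Any forecast whose chance of rain is below 50% will show a median (‘likely’) amount of 0 mm in this scheme, which is exactly the apparent contradiction a casual user may stumble on.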

July 25, 2014 at 11:57

Thanks Tom. I would also be interested in concise but precise communication. But I am afraid we cannot do much better than standardized statements backed by a help page or help file (as the BoM or the IUGG do).

July 16, 2014 at 11:49

This is an interesting piece of work; do you intend to publish it? I’m sure all operational forecasting agencies would be very interested in seeing the final report, and it would be a valuable addition to the literature.

I’m particularly interested in the question on how forecast uncertainty is communicated. Judging by the results you have, I’m assuming the responses referred to communication to civil authorities rather than publishing it online?

July 25, 2014 at 12:05

Thanks for your interest. We did not intend to publish it, but we expect to translate all interview reports into English (some are written in French or German). We could publish the reports of the institutions that consent to it.

Yes, most answers referred to communication to civil authorities. Only a few countries have developed communication that fully includes uncertainty, and it is then much more difficult to build an objective view of how the wider audience receives it.

July 17, 2014 at 14:11

Very interesting work. It gives a nice snapshot of how the subject is being considered. As you said, it helps convince forecasters and stakeholders of the need to implement pre-operational experiments on uncertainty quantification. Congratulations.

July 18, 2014 at 09:29

Very interesting. However, this covers general practices. The next step would be to carry out the same investigation for “alert” cases, when the different systems (ensemble systems, deterministic forecasts, statistical interpretations, etc.) and observational evidence (conventional observations, radar and satellite images, etc.) might give conflicting indications.