Keep the information flooding in – Social and mainstream media monitoring for improved disaster management

Contributed by Milan Kalas, Davide Muraro, Tomas Kliment, Jutta Thielen and Florian Pappenberger

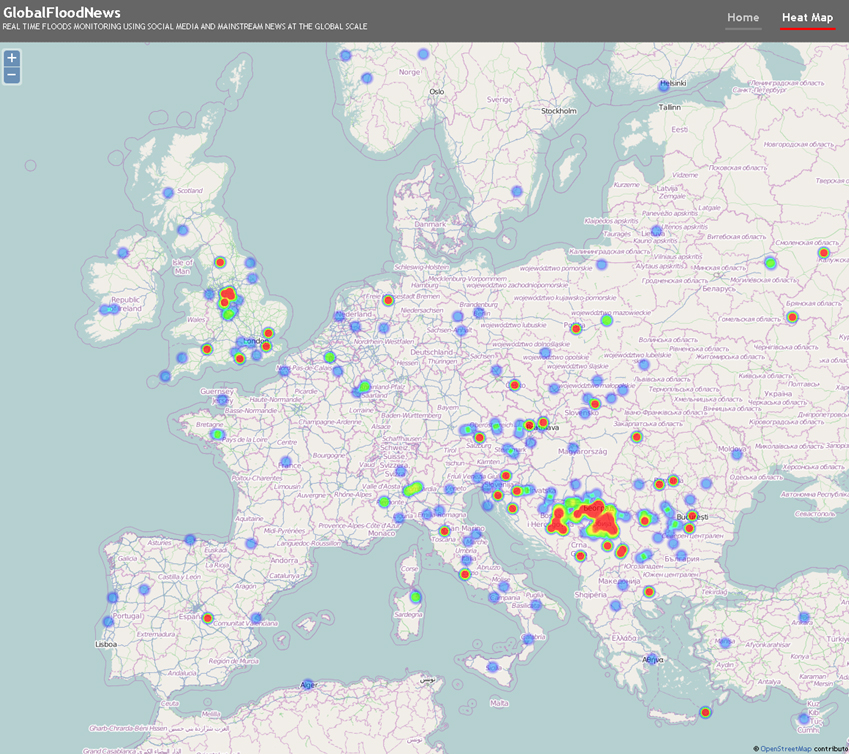

Several days of exceptionally intense and continuous rainfall from 13 May 2014 onwards caused disastrous flooding in the Balkan region (for details see this post).

The question is: how can the knowledge of the crowd be channeled to the right actors?

Flood keywords

Social and mainstream media monitoring is increasingly recognized as a valuable source of information in disaster management and response. Information on ongoing disasters can be detected within a very short time, and social media can add information to traditional data feeds (ground and remote observation schemes).

Probably the biggest attempt to use social media in crisis management was the activation of the Digital Humanitarian Network by the United Nations Office for the Coordination of Humanitarian Affairs in response to Typhoon Yolanda. A network of volunteers performed rapid needs and damage assessment by tagging reports posted to social media. The tagged reports were then used as a training set for machine learning classifiers that automatically identify tweets referring to both urgent needs and offers of help.

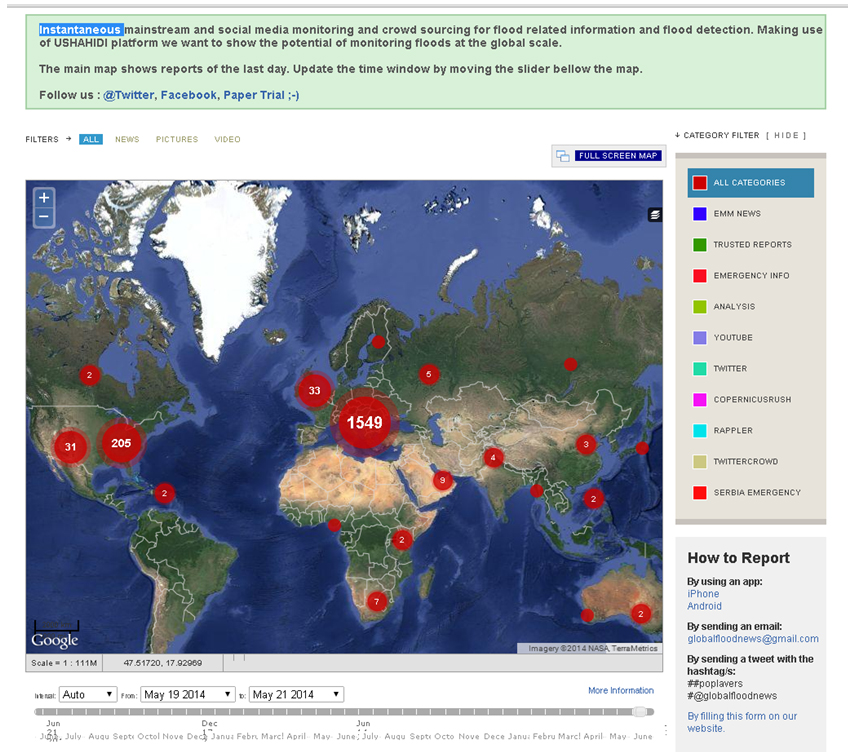

Similarly, the Serbian government has been developing such a system during the ongoing Balkan flood crisis. GlobalFloodNews offers functionality similar to that described above and runs in real time at the global scale. During major events (such as Typhoon Yolanda or the Balkan floods), we configure the system to focus on them and add event-tailored categories.

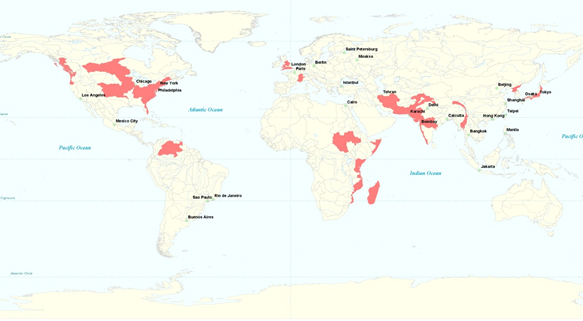

Flood Signal Regions

We use the Twitter Streaming API to analyze, in real time, all messages posted on Twitter that contain one of the flood-related keywords.

Reports that pass the keyword filter are submitted for further analysis, where the relevant information is automatically extracted using natural language and signal processing techniques. The keyword filters are adjusted and optimized automatically using machine learning algorithms as new reports are added to the system.
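The keyword filtering step can be illustrated with a minimal sketch. The keyword list and the function names below are hypothetical, chosen only for illustration; the sketch works on plain strings and makes no calls to the Twitter API itself.

```python
import re

# Hypothetical keyword list; the keywords actually used by the system are not given here.
FLOOD_KEYWORDS = ["flood", "floods", "flooding", "inundation", "poplava"]

# Match whole words only, case-insensitively, so "flood" does not match "floodlight".
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in FLOOD_KEYWORDS) + r")\b",
    re.IGNORECASE,
)


def passes_keyword_filter(text: str) -> bool:
    """Return True if the message contains at least one flood keyword."""
    return _PATTERN.search(text) is not None


def filter_stream(messages):
    """Yield only the messages that pass the keyword filter."""
    for message in messages:
        if passes_keyword_filter(message):
            yield message
```

In a live setting, `filter_stream` would wrap whatever iterator delivers incoming messages, and the keyword list would be the part that gets re-tuned as new reports arrive.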

Another crucial task in social stream processing is identifying the geographical location that the information refers to. Only a small part of the overall communication on Twitter carries geo-location information, so the approximate location has to be derived from the text.

In the current system, we use the free Yahoo geo-locator API. All relevant reports are stored in the database. Similarly, we monitor mainstream news providers (including the European Media Monitoring System of the European Commission) for flood-related news. News articles are geo-coded as well and stored in the database.
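Deriving a location from text can be sketched with a toy gazetteer lookup. This is a stand-in for illustration only, not the external geocoding service mentioned above: the `GAZETTEER` table, its approximate coordinates and the `locate` function are all assumptions.

```python
import re

# Toy gazetteer: lower-case place name -> (latitude, longitude).
# Coordinates are approximate and for illustration only.
GAZETTEER = {
    "belgrade": (44.82, 20.46),
    "obrenovac": (44.65, 20.20),
    "doboj": (44.73, 18.09),
}


def locate(text):
    """Return (place, (lat, lon)) for the first gazetteer name found in the text, or None."""
    # Split the text into alphabetic tokens (Unicode letters only).
    for token in re.findall(r"[^\W\d_]+", text.lower()):
        if token in GAZETTEER:
            return token, GAZETTEER[token]
    return None
```

A real geocoder also has to handle multi-word names, inflected forms and ambiguous place names, which this lookup ignores.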

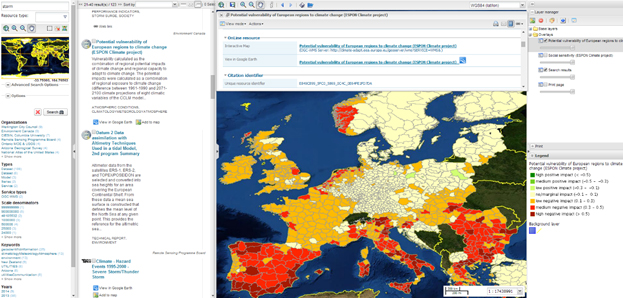

Geo WMS services

An important aspect of social media analysis for crisis response is event detection.

Many recently published studies attempt to detect anomalies and break points in time series of a normalized flood signal derived from social media.

There are two event detection methods proposed in the system:

- The first is an event detection algorithm based on peak detection in the time series of normalized relevant tweet counts.

- The second is to trigger the social media analysis from an event issued by the flood forecasting system. In this case the flood forecasting system launches a specific, event-tailored stream. Prior knowledge of the geographical domain makes it possible to focus the social media searches on a limited geographical extent, using the local language, local geographic names, etc.
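The first approach can be sketched as follows. The details are assumptions rather than the system's actual algorithm: counts are normalized here as the share of relevant tweets among all tweets per time step, and a peak is flagged when a value exceeds the mean of a trailing window by more than a chosen number of standard deviations. The names `normalize`, `detect_peaks`, `window` and `threshold` are hypothetical.

```python
from statistics import mean, stdev


def normalize(relevant_counts, total_counts):
    """Share of flood-relevant tweets per time step (0.0 when no tweets were seen)."""
    return [r / t if t else 0.0 for r, t in zip(relevant_counts, total_counts)]


def detect_peaks(signal, window=6, threshold=3.0):
    """Return indices where the signal exceeds the trailing-window mean
    by more than `threshold` standard deviations."""
    peaks = []
    for i in range(window, len(signal)):
        history = signal[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat histories, where the z-score is undefined.
        if sigma > 0 and (signal[i] - mu) / sigma > threshold:
            peaks.append(i)
    return peaks
```

The window length and threshold trade sensitivity against false alarms and would need tuning on real data.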

The flood signal derived from social media streaming can be fed back to the forecasting system to evaluate the performance of the forecast. Combined with ground and remote observations, it can also support a more accurate estimation of the damage.

GFN Interface

In the future, we intend to couple the flood forecasting system, the social media and news monitoring application, and a geo-catalogue of OGC services (WMS, WFS, WCS, etc.) discovered through the Google search engine, in order to provide the full suite of available information to crisis management centers as fast as possible.

The forecasting system will trigger an event-based search on social media and for OGC services relevant to crisis response (population distribution, critical infrastructure, hospitals, etc.).

If the flood signal coming from the forecast is confirmed by social media, the information collection can be updated in real time with live data crowdsourced from social media (needs, damage, etc.).

The current version of the system makes use of the USHAHIDI Crowdmap platform, which is designed to easily crowdsource information using multiple channels, including SMS, email, Twitter and the web. With it, we want to show the potential of monitoring floods at the global scale.

The mapping front-end is updated in real time with information from social and mainstream media monitoring. Registered “reporters” can easily submit reports on floods using a simple and user-friendly web form, email or SMS.

Flood signal Heatmaps.

Users can browse the information in the form of maps, heat maps and text reports, and can select events by geographic region, time and type.
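As a rough illustration of how geo-located reports could be aggregated for a heat map, the sketch below counts reports per latitude/longitude grid cell. The grid size and the `heatmap_counts` function are assumptions for illustration, not a description of how the front-end builds its heat maps.

```python
import math
from collections import Counter


def heatmap_counts(points, cell_deg=0.5):
    """Count reports per grid cell.

    `points` is an iterable of (lat, lon) pairs; each cell is keyed by the
    integer index of its south-west corner in units of `cell_deg` degrees.
    """
    counts = Counter()
    for lat, lon in points:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell] += 1
    return counts
```

Cells with higher counts would be drawn with more intense colors. A smaller `cell_deg` gives a finer but sparser map.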

June 12, 2014 at 09:07

http://www.globalfloodsystem.com

June 20, 2014 at 14:02

Geocatalogue of OGC resources discovered on Google available here: http://adamassoft.it/klimeto/geonetwork . More than 10k OGC services and 200k datasets discoverable.